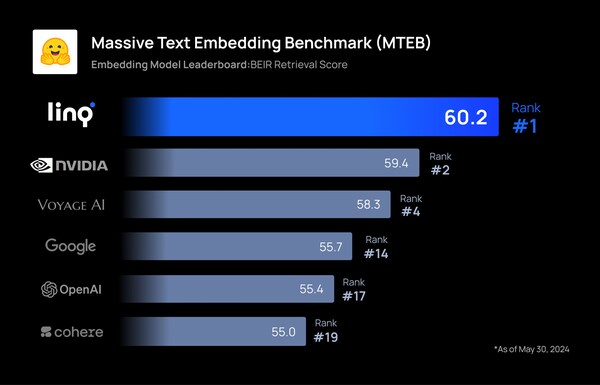

BOSTON, June 5, 2024 /PRNewswire/ -- Linq, a generative AI startup, announced that its large embedding model "Linq-Embed-Mistral" ranked first in the text retrieval evaluation on HuggingFace's "Massive Text Embedding Benchmark (MTEB)" leaderboard, outpacing competitors like NVIDIA, Salesforce, Google, OpenAI, and Cohere. This evaluation is run by HuggingFace, the world's largest machine learning platform.

Linq's embedding model achieved a score of 60.2 points in the text retrieval category, securing the top position. This placed Linq ahead of NVIDIA, which scored 59.4 points, and Voyage AI, which scored 58.3 points. Google's model followed with a score of 55.7, while OpenAI and Cohere scored 55.4 and 55.0 points, respectively.

The MTEB leaderboard by HuggingFace ranks the performance of embedding models across seven categories, including classification, clustering, pair classification, reranking, retrieval, semantic textual similarity (STS), and summarization. Linq's embedding model demonstrated excellent performance not only in the text retrieval category but also in other categories, earning an overall rank of third.

The MTEB lists more than 300 embedding models, highlighting the competitive yet manageable landscape of embedding model technology. Linq's top performance in this specific benchmark underscores its superiority in embedding model technology.

Embedding models are critical in generative AI, particularly for addressing the hallucination problem of large language models (LLMs) by employing retrieval-augmented generation (RAG) technology. RAG allows models to produce reliable outputs by accessing the latest data or internal documents not available within the LLM.

Leading this project, Dr. Junseong Kim stated, "Our research demonstrates that due to the broad topic diversity and challenging difficulty of retrieval data, GPT-generated data is not perfect and requires thorough verification and refinement. Through these processes, we can achieve quality comparable to human-labeled data, ultimately attaining the best retrieval performance based on the MTEB benchmark dataset. This study shows that through elaborate data crafting and filtering using GPT, we can create models optimized for retrieval-augmented generation (RAG) and maximize performance in specific fields." Additionally, he emphasized, "Not only is refined data crucial, but optimized training methodologies and rapid experimental cycles are also key to maximizing retrieval performance."

Linq's Co-founder & CEO, Jacob Choi, emphasized, "Accurate search is crucial for generative AI enterprises' adoption. We're proud to have developed the core embedding model to achieve this, and we'll keep expanding and refining it to ensure precise text searches in specialized fields like finance and legal." Choi noted that while 2023 saw the rise of B2C use cases for generative AI with the advent of ChatGPT, 2024 will witness the growth of B2B (business-to-business) applications with improved accuracy and security technologies.

[Company Description]

Founded in 2022, Linq (Wecover Platforms Inc) was established by MIT Electrical and Computer Engineering graduate Jacob Choi and MIT Computational Science and Engineering Ph.D. Subeen Pang. In 2021, Choi was named in Forbes' "30 Under 30" in the science category for his AI neuromorphic computing research. Linq received early investments from KakaoVentures, Smilegate Investment, and Yellowdog in 2022. In 2023, Linq won the Samsung Open Collaboration hosted by Samsung Financial Networks and was selected for MassChallenge Fintech cohort, the largest non-equity accelerator in the U.S., continuing its collaboration with KPMG US.

Contact: Jacob Choi (jacob.choi@getlinq.com)

source: Linq (Wecover Platforms Inc)

【你點睇?】民主派初選案,45名罪成被告判囚4年2個月至10年不等,你認為判刑是否具阻嚇作用?► 立即投票